Table of Contents

Welcome to our blog where we delve into the exciting world of custom training for language translation models using Hugging Face Trainer. This post specifically focuses on training a pre-trained translation model for a Sanskrit-to-English translation task.

Our journey into this realm begins with exploring the M2M-100 language model, a groundbreaking development from Meta’s Facebook AI.

Meta’s Facebook AI has revolutionized the field of multilingual machine translation with the introduction of M2M-100. This pioneering model stands out as the first in its domain capable of translating between any pair of 100 languages without depending on English data. Notably, M2M-100 is an open-source triumph, allowing for widespread access and utilization.

What sets M2M-100 apart is its direct training approach on language pairs, such as Chinese to French, English to Mandarin, and regional languages such as Bengali, Hindi, Marathi, Nepali, Tamil, and Urdu. The actual languages supported are mentioned in the official hugging face repository of M2M 100 here.

In the following sections, we will guide you through the process of leveraging custom training a translation model for Sanskrit to English, using the resources and methodologies provided by Hugging Face Trainer. The dataset to be used for custom training Sanskrit to English Model is rahular/Itihasa. Stay tuned as we embark on this enlightening journey.

Installing Libraries

The first step is to install important python libraries which we are going to use in training and evaluating our Sanskrit to English Translation model.

!pip install sentencepiece !pip install transformers==4.28.0 !pip install datasets evaluate !pip install sacrebleu

Importing Libraries

Next is to import necessary libraries to use for custom training Sanskrit to English Model.

from transformers import AutoTokenizer, M2M100ForConditionalGeneration,Seq2SeqTrainingArguments, Seq2SeqTrainer, DataCollatorForSeq2Seq,pipeline from huggingface_hub import notebook_login from datasets import load_dataset,load_metric import evaluate import numpy as np

Loading Model & Tokenizer

Now, we will load the model and the tokenizer. The model we will use for training Sanskrit-English model is “facebook/m2m100_418M” which is Meta’s open source model with 418 Million parameters.

model = M2M100ForConditionalGeneration.from_pretrained("facebook/m2m100_418M")

tokenizer = AutoTokenizer.from_pretrained("facebook/m2m100_418M")Loading Dataset

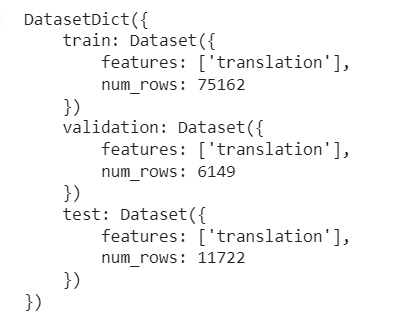

As we have downloaded the model and the associated tokenizer, now we will load the dataset i.e., “rahular/itihasa” on huggingface. Itihasa is a Sanskrit-English translation corpus containing 93,000 Sanskrit shlokas and their English translations extracted from M. N. Dutt’s seminal works on The Rāmāyana and The Mahābhārata. The digitized versions of these original texts are accessible at this location. Further information about the dataset, including its compilation method, can be found in this academic paper.

from datasets import load_dataset

dataset = load_dataset("rahular/itihasa")

dataset

Tokenization

Let’s take a closer look at a sample tokenization function used in this context.

The function tokenize_function is designed to operate on a batch of data, specifically a collection of translation pairs. Each batch contains Sanskrit and English texts extracted from the dataset. The function begins by separating the Sanskrit and English texts from each translation pair within the batch. This is achieved through list comprehensions that iterate over each entry in the batch’s ‘translation’ field, creating two separate lists: one for Sanskrit texts (sanskrit_texts) and another for their corresponding English translations (english_texts).

The next critical step is tokenizing these texts using a pre-defined tokenizer. This tokenizer is applied separately to both the Sanskrit texts (inputs) and the English texts (targets). The function calls the tokenizer with specific parameters: truncation is enabled to handle texts longer than the maximum length, padding is set to ‘max_length’ to ensure uniformity in sequence length, the maximum length is capped at 128 tokens, and the return type of tensors is specified as PyTorch tensors (‘pt’).

After tokenization, the function organizes the tokens into a structured format suitable for training a sequence-to-sequence (Seq2Seq) model. It returns a dictionary containing the following key-value pairs:

input_ids: The token IDs for the Sanskrit texts.attention_mask: The attention masks for the Sanskrit texts, indicating to the model which tokens should be focused on.decoder_input_ids: The token IDs for the English texts, serving as inputs to the decoder part of the Seq2Seq model.decoder_attention_mask: The attention masks for the English texts, guiding the decoder’s focus.labels: The labels for training the Seq2Seq model, which in this case are the token IDs of the target English texts. These are cloned from thedecoder_input_idsto be used in the model’s training process.

def tokenize_function(batch):

sanskrit_texts = [entry['sn'] for entry in batch['translation']]

english_texts = [entry['en'] for entry in batch['translation']]

# Tokenize the inputs (Sanskrit) and targets (English)

inputs = tokenizer(sanskrit_texts, truncation=True, padding='max_length', max_length=128, return_tensors="pt")

targets = tokenizer(english_texts, truncation=True, padding='max_length', max_length=128, return_tensors="pt")

# Return both inputs and targets tokens

return {

"input_ids": inputs["input_ids"],

"attention_mask": inputs["attention_mask"],

"decoder_input_ids": targets["input_ids"],

"decoder_attention_mask": targets["attention_mask"],

"labels": targets["input_ids"].clone() # labels for Seq2Seq models are typically the target input_ids

}

tokenized_datasets = dataset.map(tokenize_function, batched=True)

Transformers library, the DataCollatorForSeq2Seq plays a pivotal role in efficiently preparing batches of data for the sequence-to-sequence (Seq2Seq) model. The snippet shown initializes a data collator object specifically designed for Seq2Seq tasks. The DataCollatorForSeq2Seq class is imported from the transformers library, which is a widely used repository for state-of-the-art machine learning models.

This data collator is created by passing two essential arguments: tokenizer and model. The tokenizer is responsible for converting text into a format understandable by the model, while the model argument represents the Seq2Seq model that will be trained. By providing these two components, the data collator is effectively configured to process and prepare batches of data, ensuring they are appropriately tokenized and formatted to be compatible with the requirements of the Seq2Seq model.

from transformers import DataCollatorForSeq2Seq data_collator = DataCollatorForSeq2Seq(tokenizer=tokenizer, model=model)

Next we will import evaluation metrics sacrebleu and METEOR. Here, the sacrebleu metric is loaded using the evaluate.load method. SacreBLEU is a standard metric for evaluating machine translation quality. It compares the machine-generated translation against one or more reference translations, providing a score that reflects the translation’s accuracy and fluency.

The METEOR (Metric for Evaluation of Translation with Explicit ORdering) metric is loaded using the load_metric function. METEOR is another popular metric for evaluating machine translation. It differs from BLEU in that it considers factors like synonyms and stemming, aiming to produce a more nuanced assessment of translation quality.

import evaluate

from datasets import load_metric

metric = evaluate.load("sacrebleu")

meteor = load_metric('meteor')Postprocessing Function

The code snippet defines two functions, postprocess_text and compute_metrics, which are essential for evaluating the performance of a machine translation model.

postprocess_text(preds, labels): This function is designed to clean and prepare the predictions and labels for evaluation. It strips any leading and trailing spaces from both the model’s predictions (preds) and the ground truth labels (labels). The labels are further processed into a list of lists, where each inner list contains a single label.compute_metrics(eval_preds): The main function for computing evaluation metrics. It takes the raw predictions and labels from the model evaluation, processes them, and computes the performance metrics.- It first separates the predictions and labels.

- If the predictions are in a tuple format, it extracts only the necessary part.

- The predictions and labels are then decoded from their tokenized form back into text, skipping any special tokens (like padding or start/end tokens).

- The

postprocess_textfunction is applied to clean and format the decoded predictions and labels. - Evaluation metrics are computed using the processed predictions and labels. In this case, BLEU and METEOR scores are calculated, which are standard metrics for evaluating the quality of machine translations.

- The function also computes the average length of the predictions, which can be an indicator of the model’s verbosity or succinctness.

- Finally, it rounds the metric scores for easier interpretation and returns the results.

def postprocess_text(preds, labels):

preds = [pred.strip() for pred in preds]

labels = [[label.strip()] for label in labels]

return preds, labels

def compute_metrics(eval_preds):

preds, labels = eval_preds

if isinstance(preds, tuple):

preds = preds[0]

decoded_preds = tokenizer.batch_decode(preds, skip_special_tokens=True)

labels = np.where(labels != -100, labels, tokenizer.pad_token_id)

decoded_labels = tokenizer.batch_decode(labels, skip_special_tokens=True)

decoded_preds, decoded_labels = postprocess_text(decoded_preds, decoded_labels)

result = metric.compute(predictions=decoded_preds, references=decoded_labels)

meteor_result = meteor.compute(predictions=decoded_preds, references=decoded_labels)

prediction_lens = [np.count_nonzero(pred != tokenizer.pad_token_id) for pred in preds]

result = {'bleu' : result['score']}

result["gen_len"] = np.mean(prediction_lens)

result["meteor"] = meteor_result["meteor"]

result = {k: round(v, 4) for k, v in result.items()}

return resultTraining Configuration

The provided code snippet is configuring training arguments for a sequence-to-sequence (Seq2Seq) model using the Hugging Face Transformers library. This setup is crucial for defining how the model will be trained.

training_args = Seq2SeqTrainingArguments(

output_dir="M2M101",

evaluation_strategy="epoch",

learning_rate=2e-5,

per_device_train_batch_size=16,

per_device_eval_batch_size=16,

weight_decay=0.01,

save_total_limit=3,

num_train_epochs=5,

predict_with_generate=True,

fp16=True,

push_to_hub=True,

)

trainer = Seq2SeqTrainer(

model=model,

args=training_args,

train_dataset=tokenized_datasets["train"],

eval_dataset=tokenized_datasets["test"],

tokenizer=tokenizer,

data_collator=data_collator,

compute_metrics=compute_metrics,

)

# for starting the training of model

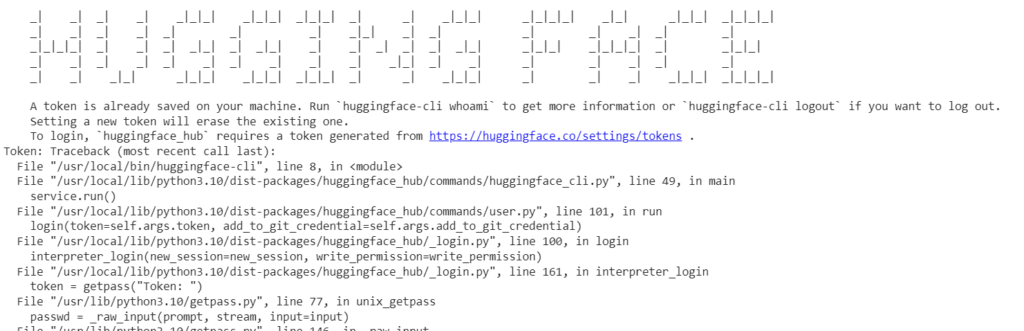

trainer.train()Now after executing the above step it will train the model. Next, in order to push the model to huggingface hub so that we can reuse later we have to login into huggingface cli.

!huggingface-cli login

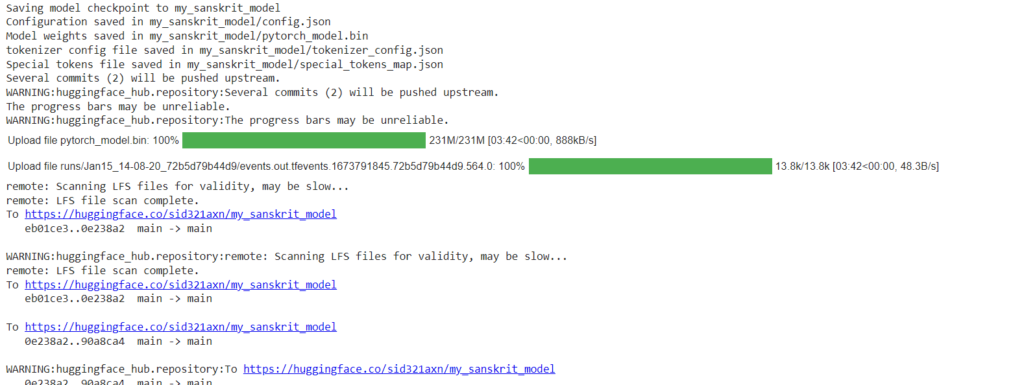

Next we will push the model to huggingface hub. in order to do so we have run following code.

trainer.push_to_hub()

Model Testing

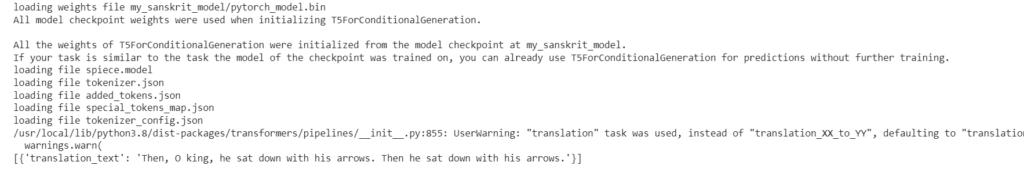

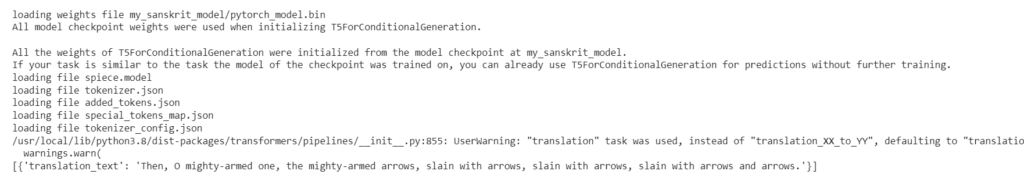

Now, we will test the model to see how well the model is doing the translation.

text = "सत्यमेवेश्वरो लोके सत्यं पद्माश्रिता सदा"

from transformers import pipeline

translator = pipeline("translation", model="my_sanskrit_model")

translator(text)

def translate_sankrit(text):

translator = pipeline("translation", model="my_sanskrit_model")

return translator(text)

translate_sankrit("क्रोधः प्रीतिं प्रणाशयति मानो विनयनाशनः । माया मित्त्राणि नाशयति लोभः सर्वविनाशनः ॥")

As you can see the model is able to do proper translation of complex sanskrit shloka into English.

Conclusion

In conclusion, the journey through custom training a translation model on Hugging Face, specifically for the task of translating Sanskrit to English, illuminates the remarkable capabilities of modern machine learning techniques. Leveraging the powerful M2M-100 model from Meta and employing meticulous data preprocessing, tokenization, and evaluation strategies, we have showcased a pathway to creating a highly effective translation tool. This model stands as a testament to the potential of AI in bridging language barriers, particularly for ancient and complex languages like Sanskrit. Its successful application in accurately translating Sanskrit texts not only opens doors to a wealth of historical and cultural knowledge but also demonstrates the vast potential of AI in the realm of language translation. The tools and techniques discussed here could be invaluable for scholars, linguists, and enthusiasts seeking to explore the rich tapestry of Sanskrit literature and its global significance, thereby contributing significantly to the preservation and understanding of cultural heritage.