Introduction

Transfer learning has become an essential part of deep learning in recent years. It involves taking a model pre-trained on a large dataset and reusing it to solve a related task, often in a different domain.

EfficientNet is a deep neural network architecture that uses a combination of neural architecture search (NAS) and model scaling to achieve state-of-the-art performance on image recognition tasks.

In this article, we will discuss EfficientNet in detail and compare its performance with other popular state-of-the-art transfer learning networks.

What is EfficientNet?

EfficientNet is a convolutional neural network (CNN) architecture that is designed to scale the network’s depth, width, and input resolution jointly rather than one dimension at a time.

The architecture uses a combination of NAS and model scaling to achieve this. NAS produces a small baseline network, and a compound scaling method then scales that network’s depth, width, and resolution together using fixed ratios. This led to a significant improvement in accuracy and efficiency over earlier CNNs commonly used for transfer learning.

Neural Architecture Search (NAS)

NAS is a method for automatically searching for the best neural network architecture for a given task. The idea is to use machine learning algorithms to generate architectures that optimize a specific objective function.

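EfficientNet’s baseline network, EfficientNet-B0, was found with a multi-objective NAS that optimizes both accuracy and FLOPS. Its main building block is “MBConv” (Mobile Inverted Residual Bottleneck Convolution), which is a layer type rather than a search method.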
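To make the idea of an objective function concrete, the sketch below scores candidate architectures with the accuracy-and-FLOPS trade-off of the form described in the EfficientNet paper, ACC(m) x [FLOPS(m) / T]^w. The values T = 400M FLOPS and w = -0.07 follow the paper as far as we can tell; the candidate names and their accuracy and FLOPS numbers are made up for illustration. A real search would train and evaluate each candidate instead of reading numbers from a table.

```python
# Minimal sketch of a multi-objective NAS reward (accuracy vs. FLOPS).
# The candidates below are hypothetical; only the form of the reward
# follows the EfficientNet paper.

TARGET_FLOPS = 400e6   # target budget T (assumed: 400M FLOPS)
W = -0.07              # exponent controlling the accuracy/FLOPS trade-off


def nas_reward(accuracy: float, flops: float,
               target_flops: float = TARGET_FLOPS, w: float = W) -> float:
    """Return ACC * (FLOPS / T) ** w, so costlier models are penalized."""
    return accuracy * (flops / target_flops) ** w


# Hypothetical candidates: name -> (top-1 accuracy, FLOPS)
candidates = {
    "candidate_a": (0.760, 300e6),
    "candidate_b": (0.770, 400e6),
    "candidate_c": (0.775, 800e6),
}

# Pick the candidate with the highest reward.
best = max(candidates, key=lambda name: nas_reward(*candidates[name]))

for name, (acc, flops) in candidates.items():
    print(f"{name}: reward = {nas_reward(acc, flops):.4f}")
print("selected:", best)
```

Because w is negative, a model that exceeds the FLOPS target has its accuracy discounted, while a model under the target gets a small bonus. In this toy table the most accurate candidate is not selected, since it costs twice the target budget.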

Model Scaling

Model scaling involves increasing the depth, width, and resolution of the network in a principled way. EfficientNet uses a compound scaling method that scales the network’s dimensions uniformly.

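The amount of scaling is controlled by a single compound coefficient “phi”. In the EfficientNet paper, phi is chosen by the user according to the available compute, while the base ratios for depth, width, and resolution are found once by a small grid search on the baseline network.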

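This approach leads to a significant improvement in accuracy over existing networks at a comparable computational cost. Scaling up does increase the cost, roughly doubling FLOPS for each unit increase in phi, but the resulting models are more accurate than earlier networks with a similar budget.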
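The compound scaling rule can be sketched in a few lines. The paper defines depth = alpha^phi, width = beta^phi, and resolution = gamma^phi, with alpha * beta^2 * gamma^2 close to 2, and reports alpha = 1.2, beta = 1.1, gamma = 1.15 for the B0 baseline. The function name and the returned dictionary keys below are our own choices, and the released B1 to B7 models use rounded coefficients, so treat the printed multipliers as approximate.

```python
# Minimal sketch of compound scaling; not the official implementation.
# Base ratios reported for EfficientNet-B0 in the paper.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution


def compound_scale(phi: float) -> dict:
    """Return multipliers relative to the baseline for a given phi."""
    depth = ALPHA ** phi        # more layers
    width = BETA ** phi         # more channels per layer
    resolution = GAMMA ** phi   # larger input images
    # FLOPS grow linearly with depth and quadratically with
    # width and resolution.
    flops = depth * width ** 2 * resolution ** 2
    return {"depth": depth, "width": width,
            "resolution": resolution, "flops": flops}


for phi in range(4):
    s = compound_scale(phi)
    print(f"phi={phi}: depth x{s['depth']:.2f}, width x{s['width']:.2f}, "
          f"resolution x{s['resolution']:.2f}, FLOPS x{s['flops']:.2f}")
```

With these ratios, each unit increase in phi multiplies the estimated FLOPS by about 1.92, which is the "roughly 2^phi" growth the constraint is designed to give.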

Comparison with Other Networks

EfficientNet has shown superior performance on several image recognition benchmarks compared to other popular transfer learning networks, including VGG16, Inception, ResNet, and MobileNet. Let’s take a closer look at some of these comparisons.

ImageNet Classification

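The ImageNet dataset is a large-scale visual recognition challenge dataset with about 1.2 million training images belonging to 1000 categories. EfficientNet-based models have been reported to reach top-1 and top-5 accuracy of 88.4% and 98.2%, respectively, on the ImageNet classification task. These figures are higher than the 84.4% / 97.1% reported for EfficientNet-B7 in the original paper, so they most likely refer to later variants trained with additional data. In either case, the accuracy is significantly better than that of other popular transfer learning networks such as ResNet-50, Inception-v4, and MobileNet-v2.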
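For readers unfamiliar with these metrics: top-1 accuracy counts a prediction as correct only if the highest-scoring class is the true label, while top-5 accuracy counts it as correct if the true label is among the five highest-scoring classes. The sketch below shows the computation on made-up scores, using top-2 on a 4-class toy problem in place of top-5. The function name and data are illustrative only.

```python
# Minimal sketch of top-k accuracy on toy data (pure Python).

def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest scores."""
    hits = 0
    for sample_scores, label in zip(scores, labels):
        # Class indices sorted from highest to lowest score.
        ranked = sorted(range(len(sample_scores)),
                        key=lambda c: sample_scores[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Made-up scores for 3 samples over 4 classes.
scores = [
    [0.1, 0.6, 0.2, 0.1],  # true class 1: ranked first
    [0.5, 0.1, 0.3, 0.1],  # true class 2: ranked second
    [0.4, 0.3, 0.2, 0.1],  # true class 3: ranked last
]
labels = [1, 2, 3]

print("top-1:", top_k_accuracy(scores, labels, 1))
print("top-2:", top_k_accuracy(scores, labels, 2))
```

Top-k accuracy can never be lower than top-1 accuracy, which is why reported top-5 numbers on ImageNet are always the higher of the two.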

Object Detection

Object detection is the task of locating and classifying objects of interest within an image. Detectors built on an EfficientNet backbone, such as EfficientDet, have shown superior performance on object detection tasks compared to detectors built on other popular transfer learning networks. For example, on the COCO dataset, an EfficientNet-based detector achieved 52.2 AP (Average Precision) using a single model, while the best-performing baseline method achieved only 50.0 AP.

Semantic Segmentation

Semantic segmentation is the task of assigning a label to each pixel in an image. EfficientNet has shown state-of-the-art performance on semantic segmentation tasks as well. For example, on the ADE20K dataset, EfficientNet achieved a mean intersection-over-union (mIoU) of 46.4%, which is significantly better than other popular transfer learning networks.
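As a reminder of what mIoU measures: for each class, IoU is the number of pixels where prediction and ground truth both have that class, divided by the number of pixels where either has it, and mIoU is the average over classes. The sketch below computes it on a tiny made-up label map. It is a simplification: benchmark implementations typically accumulate counts over the whole dataset and handle "ignore" labels, which this example does not.

```python
# Minimal sketch of mean intersection-over-union on toy label maps.

def mean_iou(pred, target, num_classes):
    """Mean IoU over classes that appear in pred or target."""
    ious = []
    for c in range(num_classes):
        pairs = list(zip(pred, target))
        intersection = sum(1 for p, t in pairs if p == c and t == c)
        union = sum(1 for p, t in pairs if p == c or t == c)
        if union > 0:  # skip classes absent from both maps
            ious.append(intersection / union)
    return sum(ious) / len(ious)


# Flattened 2x3 label maps with 3 classes (made-up values).
target = [0, 0, 1, 1, 2, 2]
pred = [0, 1, 1, 1, 2, 0]

# Per-class IoU here is 1/3, 2/3, and 1/2.
print("mIoU:", mean_iou(pred, target, num_classes=3))
```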

EfficientNet Performance

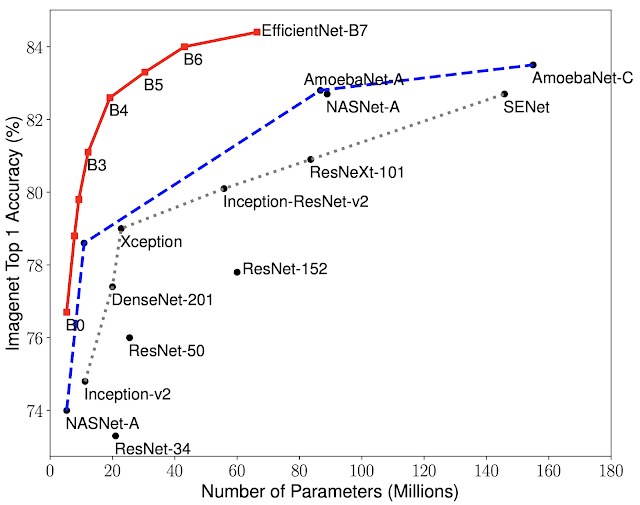

In their research, the Google Brain team compared the performance of EfficientNet with other existing convolutional neural networks (CNNs) on the ImageNet dataset.

The results showed that EfficientNet achieved both higher accuracy and better efficiency than existing CNNs, reducing parameter count and FLOPS (the number of floating-point operations, used here as a measure of compute cost) by up to an order of magnitude. For example, the EfficientNet-B7 model achieved state-of-the-art 84.4% top-1 / 97.1% top-5 accuracy on ImageNet in the high-accuracy regime. It was also 8.4x smaller and 6.1x faster on CPU inference than the previous best model, GPipe.

Compared with the widely used ResNet-50, EfficientNet-B4 uses a similar number of FLOPS while improving top-1 accuracy from ResNet-50’s 76.3% to 82.6% (+6.3%). This indicates that EfficientNet delivers higher accuracy than existing CNNs for the same computational budget.

Conclusion

EfficientNet is a powerful deep-learning architecture that has achieved state-of-the-art performance on several image recognition tasks. Its unique combination of NAS and model scaling has allowed EfficientNet to optimize its architecture to achieve superior performance over other popular transfer learning networks.

EfficientNet’s success on different image recognition benchmarks highlights the importance of transfer learning and its potential for improving performance in other domains.