Table of Contents

This article is part of an ongoing NLP course series. In the previous article, we discussed POS tagging and also did the implementation in Python. In this article, we will explore the process of implementing both lexicon-based and rule based POS tagging on the Treebank corpus of NLTK. We will begin by exploring the corpus to gain a better understanding of its structure and the data it contains.

From there, we will delve into the implementation of the taggers, exploring the strengths and weaknesses of each method along the way.

When it comes to POS tagging, there are several methods that can be used to assign the appropriate tags to words in a text. One such method is the lexicon-based approach, which uses a statistical algorithm to assign the most frequently assigned POS tag to each token.

For instance, the tag “verb” may be assigned to the word “run” if it is used as a verb more often than any other tag.

Another approach is the rule-based method, which combines the lexicon-based approach with predefined rules. These rules are designed to handle specific cases that the lexicon-based approach may not be able to handle on its own.

For example, a rule-based tagger may change the tag to VBG for words ending with ‘-ing’, or change the tag to VBD for words ending with ‘-ed’. Defining such rules requires some exploratory data analysis and intuition

Implementation in Python

In this demonstration, we will focus on exploring these two techniques by using the WSJ (Wall Street Journal) POS-tagged corpus that comes with NLTK. By utilizing this corpus as the training data, we will build both a lexicon-based and a rule-based tagger.

This guided exercise will be divided into the following sections:

- Reading and understanding the tagged dataset

- Exploratory data analysis

- Lexicon and rule-based models:

- Creating and evaluating a lexicon POS tagger

- Creating and evaluating a rule-based POS tagger

Through this exercise, we aim to provide a comprehensive understanding of the lexicon-based and rule-based tagging techniques, as well as the importance of using appropriate training data for building accurate POS taggers.

Reading and Understanding Tagged Dataset

Let’s try to understand the tagged dataset by reading it from nltk.

before proceeding to explore the dataset, we first need to install the nltk library in Python

pip install nltk

Next, we will import the required libraries and read the dataset.

# Importing libraries import nltk import numpy as np import pandas as pd import pprint, time import random from sklearn.model_selection import train_test_split from nltk.tokenize import word_tokenize import math # reading the Treebank tagged sentences wsj = list(nltk.corpus.treebank.tagged_sents()) # samples: Each sentence is a list of (word, pos) tuples wsj[:3]

In the list mentioned above, each element corresponds to a sentence and is followed by a full stop ‘.’ which also serves as its POS tag. Therefore, the POS tag ‘.’ signifies the end of a sentence.

Furthermore, it is not necessary for the corpus to be segmented into sentences. Instead, we can use a list of tuples that contain both the word and its corresponding POS tag.

To accomplish this, we will convert the original list into a list of (word, tag) tuples.

# converting the list of sents to a list of (word, pos tag) tuples tagged_words = [tup for sent in wsj for tup in sent] print(len(tagged_words)) tagged_words[:10]

We now have a list of about 100676 (word, tag) tuples. Let’s now do some exploratory analysis.

Exploratory Data Analysis

Now, let’s perform some basic exploratory analysis to gain a better understanding of the tagged corpus. To begin, let’s ask some fundamental questions, such as:

- How many unique tags are present in the corpus?

- What is the most commonly occurring tag in the corpus?

- Which tag is most frequently assigned to the words “bank” and “executive”?

# question 1: Find the number of unique POS tags in the corpus # you can use the set() function on the list of tags to get a unique set of tags, # and compute its length tags = [pair[1] for pair in tagged_words] unique_tags = set(tags) len(unique_tags)

46

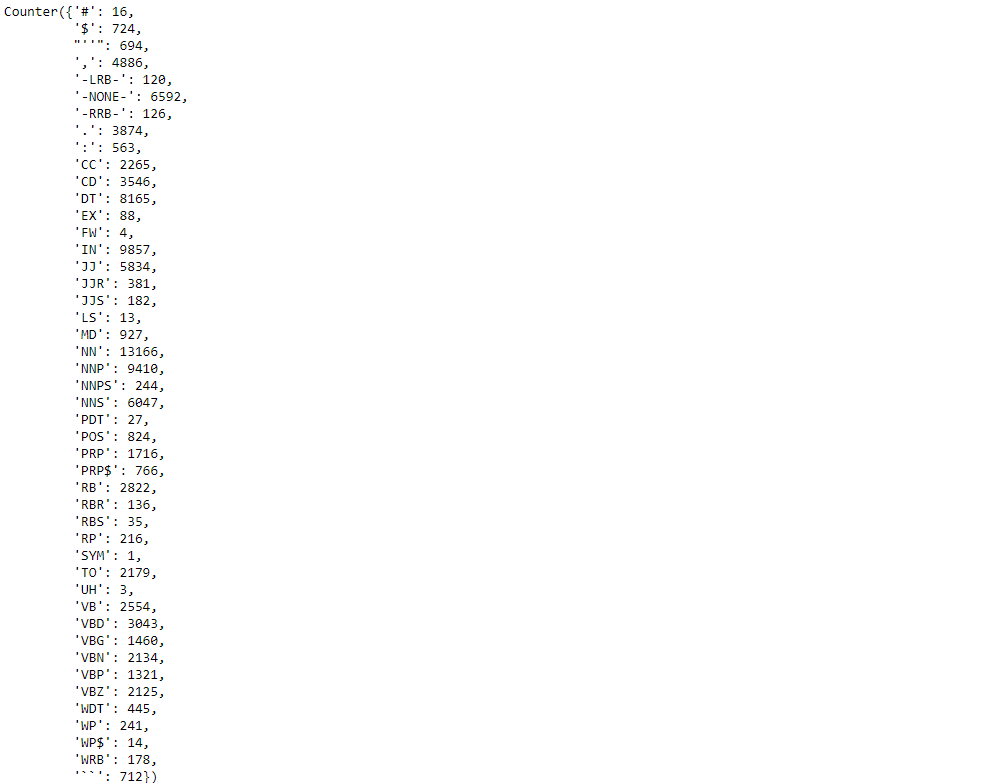

# question 2: Which is the most frequent tag in the corpus # to count the frequency of elements in a list, the Counter() class from collections # module is very useful, as shown below from collections import Counter tag_counts = Counter(tags) tag_counts

# the most common tags can be seen using the most_common() method of Counter tag_counts.most_common(5)

[('NN', 13166), ('IN', 9857), ('NNP', 9410), ('DT', 8165), ('-NONE-', 6592)]Thus, NN is the most common tag followed by IN, NNP, DT, -NONE- etc.

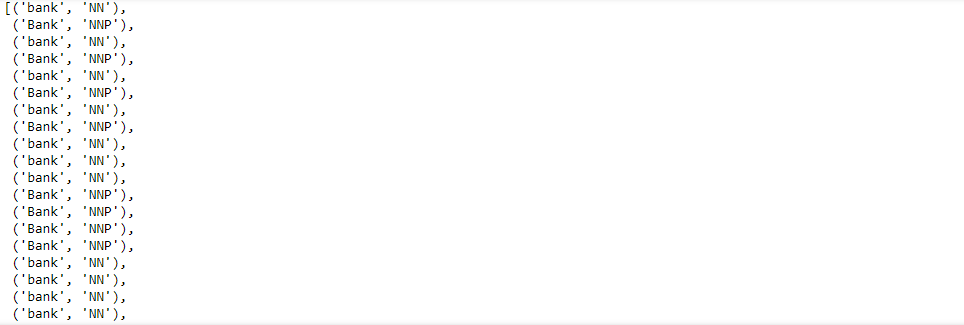

# question 3: Which tag is most commonly assigned to the word "bank". bank = [pair for pair in tagged_words if pair[0].lower() == 'bank'] bank

# question 3: Which tag is most commonly assigned to the word "executive". executive = [pair for pair in tagged_words if pair[0].lower() == 'executive'] executive

Lexicon and Rule-Based Models for POS Tagging

Let’s now see lexicon and rule-based models for POS tagging. We’ll first split the corpus into training and test sets and then use built-in NLTK taggers.

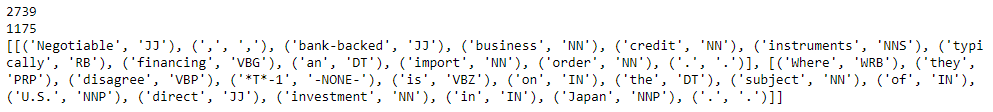

Splitting into Train and Test Sets

# splitting into train and test random.seed(1234) train_set, test_set = train_test_split(wsj, test_size=0.3) print(len(train_set)) print(len(test_set)) print(train_set[:2])

Lexicon (Unigram) Tagger

Let’s now try training a lexicon (or a unigram) tagger which assigns the most commonly assigned tag to a word.

In NLTK, the UnigramTagger() can be used to train such a model.

# Lexicon (or unigram tagger) unigram_tagger = nltk.UnigramTagger(train_set) unigram_tagger.evaluate(test_set)

0.870780747954553

As we can see with an accuracy of 87%, even a simple unigram tagger seems to perform well.

Rule-Based (Regular Expression) Tagger

Let’s now move on to building a rule-based tagger, which utilizes regular expressions. In NLTK, we can use the RegexpTagger() to provide handwritten regular expression patterns for our tagger.

For example, we can specify regexes for various grammatical forms such as gerunds and past tense verbs, 3rd singular present verbs (e.g., creates, moves, makes), modal verbs (e.g., should, would, could), possessive nouns (e.g., partner’s, bank’s), plural nouns (e.g., banks, institutions), cardinal numbers (CD), and so on. In case none of these rules are applicable to a word, we can assign the most frequent tag NN to it.

# specify patterns for tagging

# example from the NLTK book

patterns = [

(r'.*ing$', 'VBG'), # gerund

(r'.*ed$', 'VBD'), # past tense

(r'.*es$', 'VBZ'), # 3rd singular present

(r'.*ould$', 'MD'), # modals

(r'.*\'s$', 'NN$'), # possessive nouns

(r'.*s$', 'NNS'), # plural nouns

(r'^-?[0-9]+(.[0-9]+)?$', 'CD'), # cardinal numbers

(r'.*', 'NN') # nouns

]

regexp_tagger = nltk.RegexpTagger(patterns)

regexp_tagger.evaluate(test_set)0.21931829474311834

As we can see that independent rule-based tagger fails to get higher accuracy.

Combining Taggers

Let’s explore the possibility of combining the taggers we created earlier. As we saw earlier, the rule-based tagger on its own is not very effective due to the limited number of rules we have written. However, by combining the lexicon and rule-based taggers, we have the potential to create a tagger that performs better than either of the individual ones.

NLTK provides a convenient method to combine taggers using the ‘backup’ argument. In the following code, we create a regex tagger to act as a backup to the lexicon tagger. In other words, if the lexicon tagger is unable to tag a word (e.g., a new word not in the vocabulary), it will use the rule-based tagger to assign a tag. Additionally, note that the rule-based tagger itself is backed up by the ‘NN’ tag.

# rule based tagger rule_based_tagger = nltk.RegexpTagger(patterns) # lexicon backed up by the rule-based tagger lexicon_tagger = nltk.UnigramTagger(train_set, backoff=rule_based_tagger) lexicon_tagger.evaluate(test_set)

0.9049985093908377

So, as we can observe by combining the taggers our accuracy is increased to 90.49% even higher than the lexicon-based tagger.

Conclusion

In this article, we explored the Treebank dataset and implemented lexicon-based and rule-based taggers in Python. We began by understanding the importance of POS tagging and its applications in natural language processing. We then explored the Treebank dataset and performed a basic exploratory analysis to gain insights into the dataset.

Subsequently, we implemented the lexicon-based tagger and the rule-based tagger separately. While both taggers performed reasonably well, we observed that the rule-based tagger was not very effective on its own due to the limited number of rules. However, when we combined the lexicon-based and rule-based taggers using the ‘backup’ argument, we achieved a much better performance than either of the individual models.

Overall, this article provides a comprehensive understanding of POS tagging, the Treebank dataset, and the implementation of lexicon-based and rule-based taggers in Python. We hope that this article will prove to be a useful resource for those interested in natural language processing and POS tagging.