Artificial Intelligence (AI) has come a long way since the inception of deep learning. In the field of computer vision, ResNet-50 has emerged as one of the most popular and efficient deep neural networks.

It is widely used, either directly or as a backbone, in a wide range of image-related tasks such as image classification, object detection, and image segmentation.

In this article, we will delve into ResNet-50’s architecture, skip connections, and its advantages over other networks.

What is ResNet-50?

ResNet-50 is a type of convolutional neural network (CNN) that has revolutionized the way we approach deep learning. It was first introduced in 2015 by Kaiming He et al. at Microsoft Research Asia.

ResNet stands for residual network, which refers to the residual blocks that make up the architecture of the network.

ResNet-50 is based on a deep residual learning framework that allows for the training of very deep networks with hundreds of layers.

The ResNet architecture was developed in response to a surprising observation in deep learning research: adding more layers to a neural network did not always improve the results. Beyond a certain depth, even the training error got worse.

This was unexpected because a deeper network should be able to learn at least what the shallower network learned: the extra layers could simply pass their input through unchanged.

To address this issue, the ResNet team, led by Kaiming He, developed a novel architecture that incorporated skip connections.

These connections allowed the preservation of information from earlier layers, which helped the network learn better representations of the input data. With the ResNet architecture, they were able to train networks with as many as 152 layers.

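The results of ResNet were groundbreaking: an ensemble of residual networks achieved a 3.57% top-5 error rate on the ImageNet test set, taking first place in the ILSVRC 2015 classification task. ResNet-based entries also took first place in the ILSVRC 2015 detection and localization tasks and in the COCO 2015 detection and segmentation challenges.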

This demonstrated the power and potential of the ResNet architecture in deep learning research and applications.

Let’s look at the basic architecture of the ResNet-50 network.

ResNet-50 Architecture

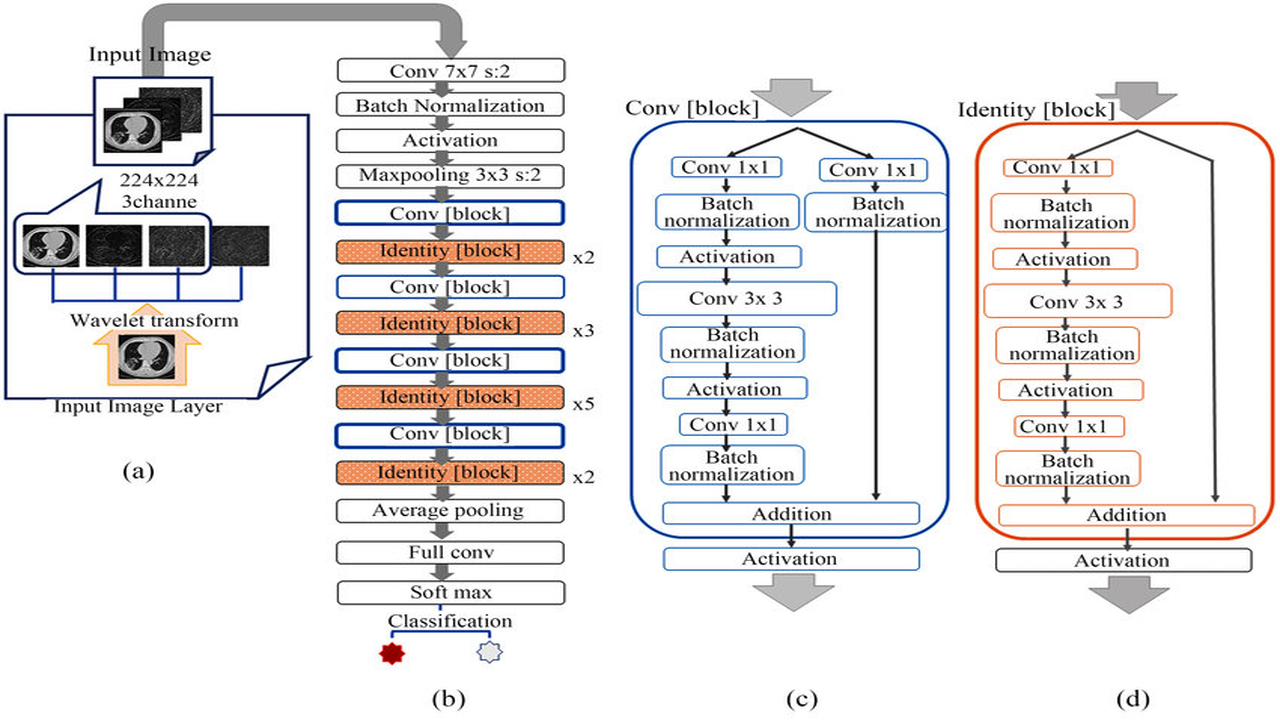

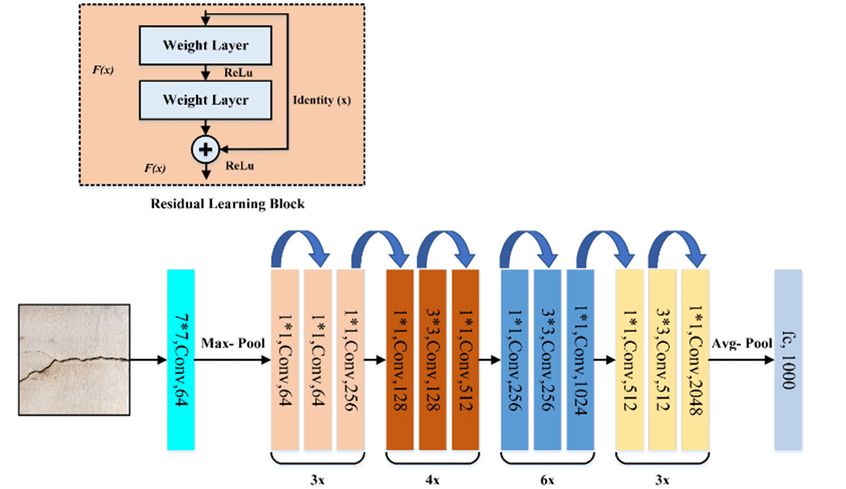

ResNet-50 consists of 50 weighted layers: an initial convolutional layer, four stages containing 3, 4, 6, and 3 residual blocks respectively, and a final fully connected layer. The residual blocks allow for the preservation of information from earlier layers, which helps the network to learn better representations of the input data.
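One way to see where the number 50 comes from is to count the layers that carry weights. The short sketch below assumes the stage layout from the original paper (3, 4, 6, and 3 blocks with three convolutions each) and does not count the extra convolutions on projection shortcuts; the function and constant names are made up for this illustration.

```python
# Number of residual blocks in each of the four residual stages of ResNet-50,
# as listed in the architecture table of the original paper.
BLOCKS_PER_STAGE = [3, 4, 6, 3]
CONVS_PER_BLOCK = 3  # each ResNet-50 block stacks a 1x1, a 3x3, and a 1x1 convolution


def count_weighted_layers(blocks_per_stage, convs_per_block):
    """Count the convolutional and fully connected layers that give the network its name."""
    stem_conv = 1        # the initial 7x7 convolution
    fully_connected = 1  # the final classification layer
    residual_convs = sum(blocks_per_stage) * convs_per_block
    return stem_conv + residual_convs + fully_connected


resnet50_depth = count_weighted_layers(BLOCKS_PER_STAGE, CONVS_PER_BLOCK)
print(resnet50_depth)  # 50
```

The same count with the stage layouts [3, 4, 23, 3] and [3, 8, 36, 3] gives 101 and 152, which matches the names of the deeper variants.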

The following are the main components of ResNet-50.

1. Convolutional Layers

The first layer of the network is a 7×7 convolutional layer with 64 filters and a stride of 2 that performs convolution on the input image. This is followed by a 3×3 max-pooling layer with a stride of 2 that downsamples the output of the convolutional layer. The output of the max-pooling layer is then passed through a series of residual blocks.
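To get a feel for how much these first two layers shrink the image, we can work out the spatial size after each one. The sketch below uses the standard output-size formula for convolution and pooling. The kernel sizes and strides (7×7 with stride 2, then 3×3 with stride 2) come from the original paper; the padding values of 3 and 1 are an assumption taken from common implementations, and the helper name is made up for this example.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial output size of a convolution or pooling layer (rounding down)."""
    return (size + 2 * padding - kernel) // stride + 1


input_size = 224  # ImageNet crop size used in the original paper

# 7x7 convolution, stride 2 (padding 3 is an assumption, see above)
after_conv = conv_output_size(input_size, kernel=7, stride=2, padding=3)

# 3x3 max pooling, stride 2 (padding 1 is an assumption, see above)
after_pool = conv_output_size(after_conv, kernel=3, stride=2, padding=1)

print(after_conv, after_pool)  # 112 56
```

So a 224×224 input is already reduced to 56×56 before it reaches the first residual block, which keeps the cost of the later layers manageable.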

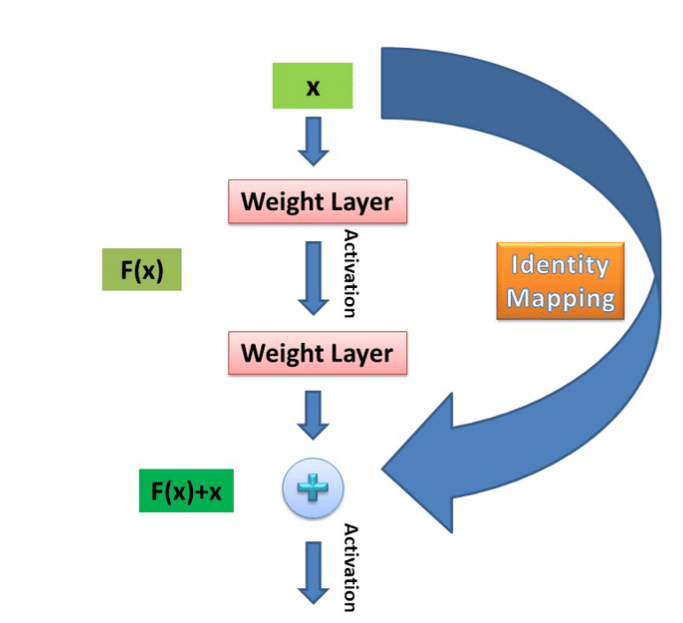

2. Residual Blocks

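In ResNet-50, each residual block is a bottleneck block with three convolutional layers: a 1×1 convolution that reduces the number of channels, a 3×3 convolution, and a 1×1 convolution that restores the number of channels. Each convolution is followed by a batch normalization layer, and the first two are also followed by a rectified linear unit (ReLU) activation function. The output of the last convolutional layer is then added to the input of the residual block, and the sum is passed through another ReLU activation function. (The shallower ResNet-18 and ResNet-34 use a simpler block with two 3×3 convolutional layers.) The output of the residual block is then passed on to the next block.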
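In the original paper, the blocks of ResNet-50 use a three-layer bottleneck design (1×1, 3×3, 1×1 convolutions) rather than two 3×3 convolutions, mainly to keep the cost down. The rough sketch below compares the number of convolution weights in both designs at the channel widths of the first residual stage (256 channels, reduced to 64 inside the bottleneck). Biases and batch normalization parameters are ignored, and the function names are invented for this comparison.

```python
def conv_weights(in_channels, out_channels, kernel):
    """Number of weights in a kernel x kernel convolution (bias ignored)."""
    return in_channels * out_channels * kernel * kernel


def bottleneck_weights(channels, reduced):
    """1x1 reduce -> 3x3 -> 1x1 restore, as in a ResNet-50 block."""
    return (conv_weights(channels, reduced, 1)
            + conv_weights(reduced, reduced, 3)
            + conv_weights(reduced, channels, 1))


def two_conv_weights(channels):
    """Two 3x3 convolutions at full width, as in a ResNet-18/34 style block."""
    return 2 * conv_weights(channels, channels, 3)


print(bottleneck_weights(256, 64))  # 69632
print(two_conv_weights(256))        # 1179648
```

At this width the bottleneck needs roughly 17 times fewer weights, which is what makes 50 or more layers affordable.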

3. Fully Connected Layer

The final layer of the network is a fully connected layer that takes the globally average-pooled output of the last residual block and maps it to the output classes. The number of neurons in the fully connected layer is equal to the number of output classes.
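As a small illustration of this last step, the sketch below implements a fully connected layer on plain Python lists and counts its parameters. The 2048 input features and 1000 ImageNet classes match the original ResNet-50; the toy weights and the function names are made up for the example.

```python
def fully_connected(features, weights, biases):
    """Map a feature vector to one score per class: scores = W @ features + b."""
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, biases)]


def fc_parameter_count(in_features, num_classes):
    """One weight per (feature, class) pair plus one bias per class."""
    return in_features * num_classes + num_classes


# Toy example: 2 input features mapped to 3 classes (one weight row per class)
toy_scores = fully_connected(
    features=[1.0, 2.0],
    weights=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
    biases=[0.0, 0.0, 0.5],
)
print(toy_scores)                      # [1.0, 2.0, 3.5]
print(fc_parameter_count(2048, 1000))  # 2049000
```

The output list always has one entry per class, which is why the number of neurons equals the number of output classes.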

Concept of Skip Connection

Skip connections, also known as shortcut or identity connections, are a key feature of ResNet-50.

They allow for the preservation of information from earlier layers, which helps the network to learn better representations of the input data.

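Skip connections are implemented by adding the input of a residual block to the output of its last layer. If the block computes F(x) for an input x, its output is F(x) + x, so the layers only have to learn the difference (the residual) between the desired output and the input.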
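The idea can be sketched in a few lines of plain Python. Here `residual_fn` is a stand-in for the stacked convolutional layers of a block (a hypothetical placeholder, not a real layer), and the block output is ReLU(F(x) + x). This is a toy illustration on lists of numbers, not how a real framework implements it.

```python
def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]


def residual_block(x, residual_fn):
    """Add the block input back onto the output of the learned function."""
    fx = residual_fn(x)
    return relu([f + xi for f, xi in zip(fx, x)])


x = [0.5, 1.0, 2.0]

# If the learned function outputs zeros, the block passes its
# (non-negative) input through unchanged.
identity_out = residual_block(x, lambda v: [0.0] * len(v))
print(identity_out)  # [0.5, 1.0, 2.0]

# A non-zero residual only adjusts the input instead of replacing it.
adjusted_out = residual_block(x, lambda v: [-1.0, 0.25, 0.0])
print(adjusted_out)  # [0.0, 1.25, 2.0]
```

The first case shows why extra residual blocks should not hurt: doing nothing only requires pushing the learned function towards zero.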

Key Features of ResNet-50

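- ILSVRC’15 classification winner (3.57% top-5 error)

- Deepest ImageNet model in the original paper has 152 layers

- Has other variants also (with 18, 34, 50, and 101 layers)

- Every ‘residual block’ in ResNet-18 and ResNet-34 has two 3×3 convolution layers; ResNet-50 and deeper variants use three-layer bottleneck blocks (1×1, 3×3, 1×1)

- No FC layers, except one last 1000-way FC softmax layer for classification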

- Global average pooling layer after the last convolution

- Batch Normalization after every convolution layer

- SGD + momentum (0.9)

- No dropout used
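To make the "SGD + momentum (0.9)" item concrete, here is a minimal sketch of a single update step. It uses one common formulation of momentum (accumulate the gradient into a velocity, then step along the velocity); other formulations exist, so treat this as an assumption rather than the exact update used by the authors. The starting learning rate of 0.1 follows the original paper, and the function name is invented for this example.

```python
def sgd_momentum_step(weights, grads, velocity, lr=0.1, momentum=0.9):
    """One SGD step with momentum: v <- momentum * v + g, then w <- w - lr * v."""
    new_velocity = [momentum * v + g for v, g in zip(velocity, grads)]
    new_weights = [w - lr * v for w, v in zip(weights, new_velocity)]
    return new_weights, new_velocity


# Two steps on a single weight with a constant gradient of 0.5
weights, velocity = [1.0], [0.0]
weights, velocity = sgd_momentum_step(weights, [0.5], velocity)
first_step = weights[0]
weights, velocity = sgd_momentum_step(weights, [0.5], velocity)
second_step = weights[0]

# The second step is larger than the first because the velocity has built up
print(first_step, second_step)
```

With a constant gradient the steps keep growing until the velocity levels off, which is how momentum speeds up progress along consistent directions.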

Advantages of ResNet-50 Over Other Networks

ResNet-50 has several advantages over other networks. One of the main advantages is its ability to train very deep networks with hundreds of layers.

This is made possible by the use of residual blocks and skip connections, which allow for the preservation of information from earlier layers.

Another advantage of ResNet-50 is its ability to achieve state-of-the-art results in a wide range of image-related tasks such as object detection, image classification, and image segmentation.

Conclusion

In conclusion, ResNet-50 is a powerful and efficient deep neural network that has revolutionized the field of computer vision. Its architecture, skip connections, and advantages over other networks make it an ideal choice for a wide range of image-related tasks.

FAQs

What is ResNet-50?

ResNet-50 is a type of convolutional neural network that is based on a deep residual learning framework.

What are residual blocks in ResNet-50?

Residual blocks are a set of layers that allow for the preservation of information from earlier layers, which helps the network to learn better representations of the input data.

What are skip connections in ResNet-50?

Skip connections, also known as identity connections, allow for the preservation of information from earlier layers by adding the output of an earlier layer to the output of a later layer.

What are the advantages of ResNet-50?

ResNet-50 has several advantages, including a design that makes very deep networks with hundreds of layers trainable, state-of-the-art results in a wide range of image-related tasks, and the use of skip connections for the preservation of information from earlier layers.

How does ResNet-50 compare to other networks?

When it was introduced, ResNet outperformed earlier networks in a wide range of image-related tasks, including object detection, image classification, and image segmentation. Its use of residual blocks and skip connections makes ResNet-50 a powerful and efficient choice for deep learning in computer vision.

What is the significance of skip connections in ResNet-50?

Skip connections allow for the preservation of information from earlier layers, which helps the network to learn better representations of the input data. This is particularly important for very deep networks, because the skip connections also give gradients a direct path back to earlier layers, which helps to mitigate the vanishing gradient problem that can occur during training.

How does ResNet-50 achieve state-of-the-art results?

ResNet-50 achieves state-of-the-art results by using residual blocks and skip connections, which allow for the preservation of information from earlier layers. This enables the network to learn better representations of the input data and make more accurate predictions.

Can ResNet-50 be used for tasks other than image-related tasks?

While ResNet-50 is primarily used for image-related tasks, it can also be adapted for use in other domains such as natural language processing (NLP) and speech recognition. However, it may require some modifications to its architecture to achieve optimal performance in these domains.

What are some potential drawbacks of ResNet-50?

One potential drawback of ResNet-50 is that it may be more computationally expensive to train and run compared to other networks. Additionally, its architecture may not be well-suited for certain types of data or tasks, and it may require significant modifications or customization to achieve optimal performance in these scenarios.

Are there any variations of ResNet-50?

Yes, the ResNet family includes several other depths: ResNet-18 and ResNet-34, which contain fewer layers than ResNet-50, and ResNet-101, ResNet-152, and ResNet-200, which contain more.

Additionally, the variants use different types of blocks: ResNet-18 and ResNet-34 use a basic block with two 3×3 convolutions, while ResNet-50 and the deeper variants use the bottleneck block.