
In this article, we explore dependency parsing, a core NLP technique for analyzing the grammatical structure of sentences.

What is Dependency Parsing?

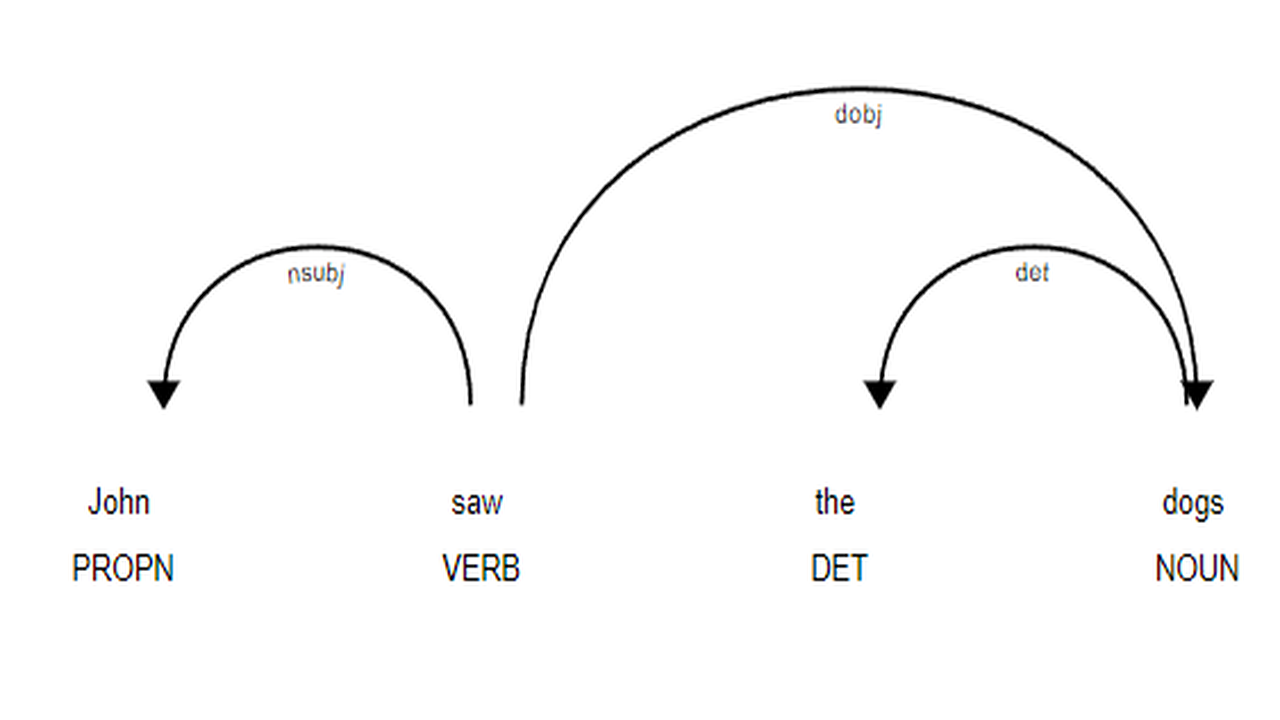

Dependency parsing is a technique used in natural language processing for analyzing the grammatical structure of sentences.

It involves identifying the relationships between words in a sentence and representing them in the form of a dependency tree.

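In a dependency tree, the words are represented as nodes, and each dependency is a directed edge from a head word to a dependent word, usually labeled with the grammatical relation between them (for example, subject or determiner). Every word except the root of the sentence has exactly one head.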
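To make the tree structure concrete, here is a minimal sketch that assumes a common storage convention not stated in this article: a "head array" in which word i stores the index of its head, and index 0 is an artificial ROOT node. The parse of "Man sits on the bench" is hand-annotated, and `is_tree` is a hypothetical helper that checks the basic tree properties: each word has one head, exactly one word attaches to ROOT, and there are no cycles.

```python
# A dependency tree as a head array: heads[i] is the index of word i's head.
# Index 0 is an artificial ROOT node; real words are numbered from 1.
words = ["ROOT", "Man", "sits", "on", "the", "bench"]
heads = [None, 2, 0, 5, 5, 2]  # hand-annotated for illustration


def is_tree(heads):
    """Check that the head array describes a single tree rooted at ROOT."""
    n = len(heads) - 1
    # Every word must point at ROOT (0) or at another word, never at itself.
    for i in range(1, n + 1):
        if not 0 <= heads[i] <= n or heads[i] == i:
            return False
    # Exactly one word may attach directly to ROOT.
    if sum(1 for i in range(1, n + 1) if heads[i] == 0) != 1:
        return False
    # Following head links from any word must reach ROOT without a cycle.
    for i in range(1, n + 1):
        seen, node = set(), i
        while node != 0:
            if node in seen:
                return False
            seen.add(node)
            node = heads[node]
    return True


# Each printed edge runs from a head to its dependent.
for i in range(1, len(words)):
    print(f"{words[heads[i]]} -> {words[i]}")
print(is_tree(heads))
```

Because each word stores exactly one head, the "one head per word" property holds by construction; the function only has to check the single root and the absence of cycles.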

Dependency parses provide useful syntactic information for many NLP tasks, such as text classification, sentiment analysis, and machine translation, and they can support applications such as chatbots, virtual assistants, and search engines.

Dependency Parsing Techniques

There are two main techniques used for dependency parsing: Transition-Based Parsing and Graph-Based Parsing.

Transition-Based Parsing

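Transition-based parsing is a machine learning-based approach that builds a dependency tree incrementally, reading the sentence from left to right while moving words between an input buffer and a stack. In the widely used arc-standard system, there are three actions: SHIFT moves the next word from the buffer onto the stack, LEFT-ARC makes the top word on the stack the head of the word beneath it and removes that dependent from the stack, and RIGHT-ARC makes the second word on the stack the head of the top word and removes the top word. A classifier trained on annotated sentences predicts the next action from the current stack and buffer, so a sentence of n words is parsed in exactly 2n actions.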
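The sketch below assumes the arc-standard transition system (one of several; arc-eager is another common choice). A real parser would call a trained classifier at each step; here a hypothetical "oracle" reads the gold tree instead, which is how training sequences for such classifiers are typically generated. The function name `arc_standard_oracle` is illustrative, and trees use the head-array convention (index 0 is ROOT).

```python
def arc_standard_oracle(heads):
    """Derive the arc-standard actions that rebuild a gold projective tree.

    heads[i] is the gold head of word i (words are 1-based; heads[0] is unused).
    Returns the list of actions and the set of (head, dependent) arcs built.
    """
    n = len(heads) - 1
    stack, buffer = [0], list(range(1, n + 1))  # stack starts with ROOT
    arcs, actions = set(), []

    def has_all_dependents(word):
        # True once every gold dependent of `word` has already been attached.
        return all((word, d) in arcs for d in range(1, n + 1) if heads[d] == word)

    while buffer or len(stack) > 1:
        if len(stack) >= 2:
            s0, s1 = stack[-1], stack[-2]
            # LEFT-ARC: top word heads the word beneath it; pop the dependent.
            if s1 != 0 and heads[s1] == s0:
                arcs.add((s0, s1))
                stack.pop(-2)
                actions.append("LEFT-ARC")
                continue
            # RIGHT-ARC: second word heads the top word, once the top word is complete.
            if heads[s0] == s1 and has_all_dependents(s0):
                arcs.add((s1, s0))
                stack.pop()
                actions.append("RIGHT-ARC")
                continue
        if not buffer:
            raise ValueError("tree is not projective; arc-standard cannot build it")
        # SHIFT: move the next input word onto the stack.
        stack.append(buffer.pop(0))
        actions.append("SHIFT")
    return actions, arcs


# "Man sits on the bench": Man<-sits, ROOT->sits, on<-bench, the<-bench, sits->bench
actions, arcs = arc_standard_oracle([None, 2, 0, 5, 5, 2])
print(actions)
print(sorted(arcs))
```

For the five-word example this yields ten actions (five SHIFTs and five arcs), matching the 2n count. The `ValueError` branch reflects a real limitation: arc-standard can only produce projective trees, in which no two arcs cross.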

Graph-Based Parsing

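Graph-based parsing treats every pair of words in the sentence as a potential head-dependent edge and assigns each edge a score, typically with a trained model. The parser then searches for the dependency tree with the highest total score, usually with a maximum spanning tree algorithm such as Chu-Liu/Edmonds or, when the tree must be projective, Eisner's algorithm.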
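A toy illustration of the graph-based idea follows. The edge scores are invented for this example (a real parser learns them), and instead of Chu-Liu/Edmonds or Eisner's algorithm it uses plain brute force over every head assignment, which is only feasible for very short sentences. `best_tree` and `is_tree` are hypothetical helper names.

```python
from itertools import product


def is_tree(heads):
    """True if the head array forms one tree: a single word under ROOT and no cycles."""
    n = len(heads) - 1
    if sum(1 for i in range(1, n + 1) if heads[i] == 0) != 1:
        return False
    for i in range(1, n + 1):
        seen, node = set(), i
        while node != 0:
            if node in seen:
                return False  # a cycle, including a word heading itself
            seen.add(node)
            node = heads[node]
    return True


def best_tree(score):
    """Exhaustively find the head array with the highest total edge score.

    score[h][d] scores the edge from head h to dependent d; index 0 is ROOT.
    This tries all (n + 1) ** n head assignments, so it only suits toy sentences.
    """
    n = len(score) - 1
    best, best_total = None, float("-inf")
    for choice in product(range(n + 1), repeat=n):
        heads = [None, *choice]
        if not is_tree(heads):
            continue
        total = sum(score[heads[d]][d] for d in range(1, n + 1))
        if total > best_total:
            best, best_total = heads, total
    return best, best_total


# Hypothetical edge scores for "Man sits on the bench"; unlisted edges score 0.
words = ["ROOT", "Man", "sits", "on", "the", "bench"]
score = [[0] * len(words) for _ in words]
score[0][2] = 10  # ROOT -> sits
score[2][1] = 8   # sits -> Man
score[0][1] = 9   # ROOT -> Man (tempting but wrong)
score[5][3] = 6   # bench -> on
score[2][3] = 4   # sits -> on (competing attachment)
score[5][4] = 7   # bench -> the
score[2][5] = 5   # sits -> bench

heads, total = best_tree(score)
print([words[h] for h in heads[1:]], total)
```

Note how the single highest-scoring head for "Man" (ROOT) is rejected: attaching both "Man" and "sits" to ROOT would break the one-root constraint, so the search settles on the best valid tree instead of picking each head greedily.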

Examples of Dependency Parsing

Let’s look at some examples of dependency parsing to gain a better understanding of this technique.

Example 1: “Man sits on the bench”

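In the sentence “Man sits on the bench,” the word “Man” is the subject (the one doing something) and “sits” is the main verb (what is being done). “Sits” takes no direct object here; instead, “bench” is the object of the preposition “on,” and the phrase “on the bench” tells us where the sitting happens. “The” is a determiner that depends on “bench.”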
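One way to write this analysis down is as labeled head-dependent pairs. The sketch below is a hand-annotated parse using Universal Dependencies-style labels (nsubj, root, case, det, obl); other label schemes exist, and some English parsers instead attach “on” to “sits” and “bench” to “on.” The `describe` helper is hypothetical.

```python
# Hand-annotated parse of "Man sits on the bench" with Universal Dependencies-style labels.
# Each token is (id, form, head id, relation); head 0 is the artificial ROOT.
parse = [
    (1, "Man", 2, "nsubj"),   # subject of "sits"
    (2, "sits", 0, "root"),   # main verb
    (3, "on", 5, "case"),     # preposition marking "bench"
    (4, "the", 5, "det"),     # determiner of "bench"
    (5, "bench", 2, "obl"),   # where the sitting happens
]


def describe(parse):
    """Return one 'head --relation--> dependent' line per token."""
    forms = {0: "ROOT", **{tid: form for tid, form, _, _ in parse}}
    return [f"{forms[head]} --{rel}--> {form}" for _, form, head, rel in parse]


for line in describe(parse):
    print(line)
```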

Dependency parsing focuses on the relationships between individual words rather than phrases or larger structures.

A useful starting point is the core clause pattern of subject, verb, and object, although not every sentence has all three: “sits” in Example 1 takes no direct object. In present-day English, the typical word order for these elements is subject-verb-object (SVO).
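As a rough sketch of how these core roles can be read off a parse, the hypothetical helpers below look for the root verb's nsubj and obj dependents (Universal Dependencies-style labels are assumed) and report the surface order of the roles they find. “Man reads a book” is an invented second sentence, included only to contrast with Example 1.

```python
def core_roles(parse):
    """Return (subject, verb, object) forms for the root verb; a missing role is None.

    parse is a list of (id, form, head id, relation) tuples with UD-style labels (assumed).
    """
    root_id, root_form = next((tid, form) for tid, form, head, _ in parse if head == 0)

    def dependent(rel):
        # First word attached to the root verb with the given relation, if any.
        return next((form for _, form, head, r in parse if head == root_id and r == rel), None)

    return dependent("nsubj"), root_form, dependent("obj")


def word_order(parse):
    """Return the surface order of the core roles present, e.g. 'SVO' or 'SV'."""
    root_id = next(tid for tid, _, head, _ in parse if head == 0)
    letters = {"nsubj": "S", "obj": "O"}
    found = [(root_id, "V")] + [
        (tid, letters[rel]) for tid, _, head, rel in parse if head == root_id and rel in letters
    ]
    # Sorting by token id recovers left-to-right order in the sentence.
    return "".join(letter for _, letter in sorted(found))


sits = [(1, "Man", 2, "nsubj"), (2, "sits", 0, "root"), (3, "on", 5, "case"),
        (4, "the", 5, "det"), (5, "bench", 2, "obl")]
reads = [(1, "Man", 2, "nsubj"), (2, "reads", 0, "root"), (3, "a", 4, "det"),
         (4, "book", 2, "obj")]  # hypothetical second sentence

print(core_roles(sits), word_order(sits))    # ('Man', 'sits', None) SV
print(core_roles(reads), word_order(reads))  # ('Man', 'reads', 'book') SVO
```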

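Many sentences are more complex than this, with modifiers, subordinate clauses, and coordination, but the same representation still applies: every word attaches to exactly one head, so even a long sentence forms a single dependency tree that the parsing techniques above can analyze.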